Multi-Persona LLM System

A decision-support system with LLM-generated debates

HIGHLIGHTS

This project investigates how multi-persona large language model (LLM) systems can reduce confirmation bias in users’ information-seeking behavior. I designed, implemented, and evaluated a debate-style conversational system in which AI personas represent diverse viewpoints on controversial topics. The goal was to explore whether structured, multi-perspective interactions can promote balanced reasoning and critical thinking during information search.

Role: Lead Researcher (System Design, Experimental Design, UX Evaluation, Data Analysis)

Timeline: August 2023 - September 2025

Collaborators: Houjiang Liu, Dan Zhang, Dr. Jacek Gwizdka, Dr. Matt Lease

RESEARCH GOALS

How do users experience multi-persona debates compared to standard search interfaces?

Can AI-driven debates help users engage with counter-attitudinal content?

Do such systems influence users’ belief certainty and reduce bias?

RESEARCH APPROACH

To address these goals, I designed a mixed-methods study to examine how system design influences user cognition and judgment:

Controlled User Experiment: A within-subjects study comparing users’ engagement and belief change when interacting with multi-persona LLM debates versus baseline search results.

Behavioral Logging: Recorded user interactions (persona selections, conversation turns, time on task) to model engagement patterns.

Eye-Tracking & Attention Analysis: Captured how visual attention was distributed across arguments by quality, stance polarity, and credibility cues over successive debate rounds.

Survey & Interview Feedback: Pre/post belief measures quantified belief change, and semi-structured interviews provided qualitative insights into reasoning and user trust.

This mixed-methods approach connected observable attention behaviors with subjective cognitive outcomes, yielding actionable UX insights into how interactive debate framing can mitigate bias.
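To illustrate the kind of aggregation that behavioral logging makes possible, here is a minimal Python sketch that turns one participant's event log into engagement metrics. The `LogEvent` record, its field names, and the event kinds (`"persona_select"`, `"turn"`) are hypothetical and do not reflect the study's actual logging schema; time on task is approximated as the span between the first and last logged event.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LogEvent:
    # Hypothetical event record; field names are illustrative, not the study's schema.
    participant: str
    timestamp: float  # seconds since the task started
    kind: str         # e.g. "persona_select" or "turn"
    detail: str = ""  # e.g. the selected persona's label


def engagement_summary(events):
    """Aggregate one participant's logged events into simple engagement metrics."""
    if not events:
        return {"time_on_task": 0.0, "turns": 0, "persona_selections": Counter()}
    ordered = sorted(events, key=lambda e: e.timestamp)
    return {
        # Approximate time on task as the span from the first to the last event.
        "time_on_task": ordered[-1].timestamp - ordered[0].timestamp,
        "turns": sum(1 for e in ordered if e.kind == "turn"),
        "persona_selections": Counter(
            e.detail for e in ordered if e.kind == "persona_select"
        ),
    }


# Hypothetical log for one participant.
events = [
    LogEvent("P01", 0.0, "persona_select", "scientist"),
    LogEvent("P01", 12.5, "turn"),
    LogEvent("P01", 40.0, "turn"),
    LogEvent("P01", 95.0, "persona_select", "policymaker"),
]
summary = engagement_summary(events)
print(summary["time_on_task"], summary["turns"])  # 95.0 2
```

Per-participant summaries like this could then feed the engagement comparisons and clustering described in the evaluation.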

SYSTEM DESIGN

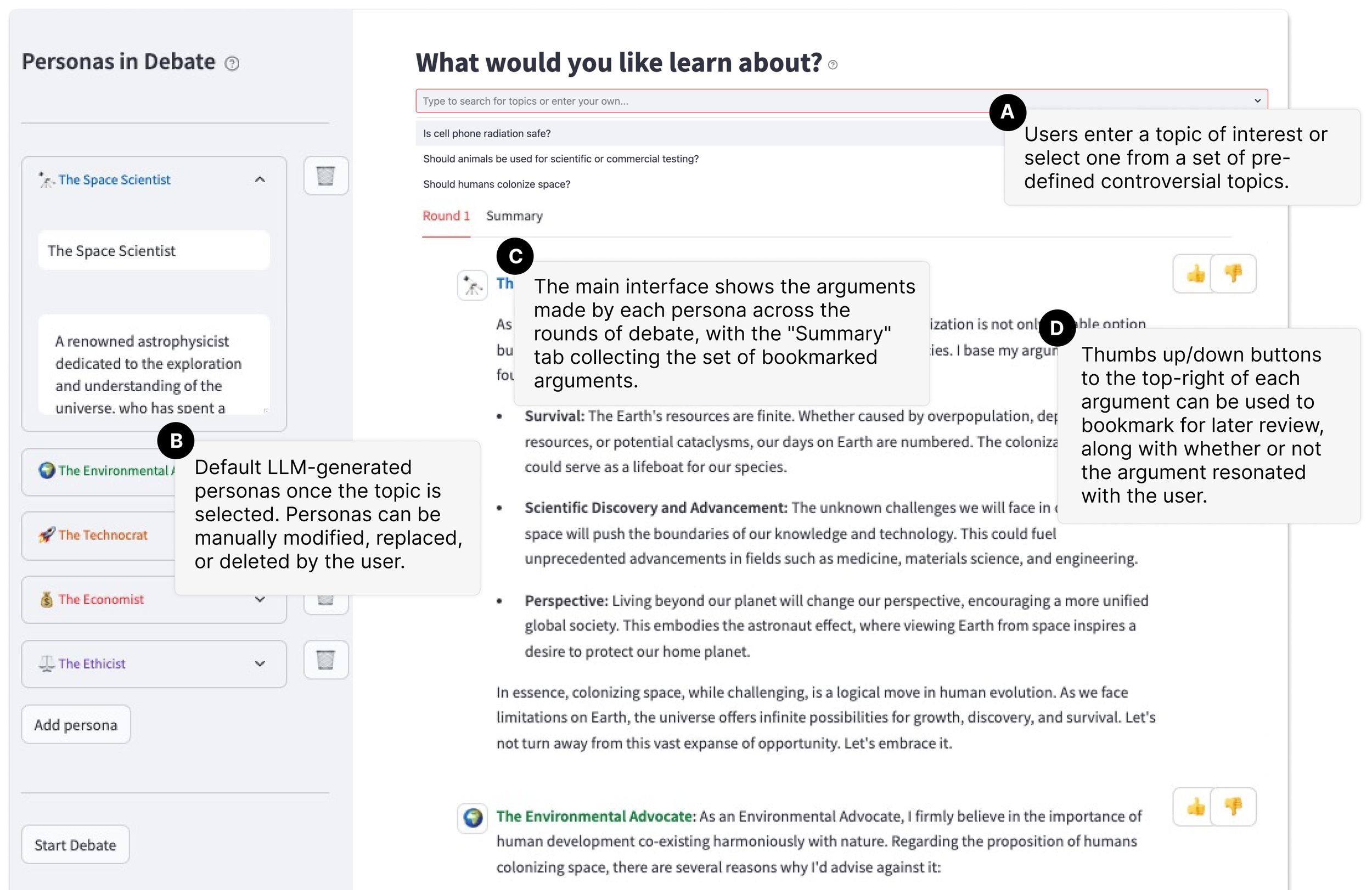

Persona Creation: The LLM generates diverse AI personas with relevant backgrounds (e.g., scientist, activist, policymaker) from structured prompts that balance pro and con viewpoints.

Debate Interface: Sequential argument flow, color-coded personas, interactive persona selection and customization, content bookmarking (“thumbs-up/down”), and a summary review panel.
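One way to structure a balanced persona-generation prompt is sketched below. The prompt wording, the JSON keys (`name`, `background`, `stance`), the example topic, and the helper names are hypothetical, not the system's actual prompts; the sketch only shows how an even pro/con split can be requested and then checked on the model's response before personas reach the user. No LLM is called here; the response is a hand-written stand-in.

```python
import json
from collections import Counter


def build_persona_prompt(topic: str, n_personas: int = 4) -> str:
    """Build a prompt requesting personas split evenly between PRO and CON (hypothetical wording)."""
    if n_personas < 2 or n_personas % 2 != 0:
        raise ValueError("n_personas must be an even number >= 2 so stances can be balanced")
    half = n_personas // 2
    return (
        f"Topic: {topic}\n"
        f"Create {n_personas} debate personas with backgrounds relevant to the topic "
        "(for example, scientist, activist, policymaker).\n"
        f"Exactly {half} personas must argue PRO and {half} must argue CON.\n"
        'Respond only with a JSON list of objects with keys "name", "background", "stance".'
    )


def stances_balanced(llm_response: str) -> bool:
    """Return True if the JSON persona list contains only PRO/CON, in equal non-zero counts."""
    personas = json.loads(llm_response)
    counts = Counter(p["stance"].strip().upper() for p in personas)
    return set(counts) <= {"PRO", "CON"} and counts["PRO"] == counts["CON"] > 0


prompt = build_persona_prompt("Should schools ban smartphones?")  # example topic only
# Hand-written stand-in for a model response.
sample = json.dumps([
    {"name": "A", "background": "scientist", "stance": "pro"},
    {"name": "B", "background": "activist", "stance": "con"},
    {"name": "C", "background": "policymaker", "stance": "pro"},
    {"name": "D", "background": "teacher", "stance": "con"},
])
print(stances_balanced(sample))  # True
```

Checking the response, rather than trusting the prompt alone, gives a point where an unbalanced persona set could be regenerated.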

Design Objectives:

Ensure broad viewpoint representation across AI personas

Create interactive, dynamic debates that mirror human argumentation

Foster user engagement and reflection through active participation

Core Design Features

Persona Generation: GPT-4 creates diverse personas (e.g., scientist, environmentalist, policymaker) through balanced prompt design

Debate Interaction: Sequential persona-to-persona dialogue with color-coded identifiers for easy tracking

User Feedback Tools: Users bookmark arguments and revisit them in a Summary view to reflect on changing opinions

Balance Assurance: Built-in prompts are designed to maintain ideological diversity and avoid reinforcing users’ existing biases
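The sequential, persona-to-persona flow can be approximated by a turn schedule that alternates stances within each round, so both sides are heard in every round. This is a hypothetical scheduling rule for illustration: the persona labels are placeholders, and the system's actual turn-taking logic is not described on this page.

```python
from itertools import zip_longest


def debate_schedule(pro, con, rounds):
    """Return (round, speaker) pairs in which pro and con personas alternate.

    If one side has more personas, its extra speakers go last in each round.
    """
    # Interleave: pro[0], con[0], pro[1], con[1], ... skipping missing slots.
    per_round = [p for pair in zip_longest(pro, con) for p in pair if p is not None]
    return [(r, speaker) for r in range(1, rounds + 1) for speaker in per_round]


schedule = debate_schedule(["Pro-1", "Pro-2"], ["Con-1", "Con-2"], rounds=2)
for round_no, speaker in schedule[:4]:
    print(round_no, speaker)
# 1 Pro-1
# 1 Con-1
# 1 Pro-2
# 1 Con-2
```

Alternating by stance rather than by persona order is one simple way to keep a single viewpoint from dominating a round.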

The interface allows users to select or edit personas, observe debates, and bookmark arguments they agree or disagree with.
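The bookmark-and-summary loop can be modeled with a small data structure: each thumbs-up/down is stored as a record, and the Summary view groups records by vote and then by persona. The `Bookmark` fields and the `summary_view` grouping are assumptions made for illustration, not the system's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Bookmark:
    # Hypothetical record for one thumbs-up/down on an argument.
    persona: str
    argument: str
    vote: str  # "up" (agree) or "down" (disagree)


def summary_view(bookmarks):
    """Group bookmarked arguments by vote, then by persona, in the order they were saved."""
    view = {"up": defaultdict(list), "down": defaultdict(list)}
    for b in bookmarks:
        if b.vote not in view:
            raise ValueError(f"unknown vote: {b.vote!r}")
        view[b.vote][b.persona].append(b.argument)
    return {vote: dict(by_persona) for vote, by_persona in view.items()}


marks = [
    Bookmark("Pro-1", "Argument about cost savings", "up"),
    Bookmark("Con-1", "Argument about access", "down"),
    Bookmark("Pro-1", "Argument about evidence", "up"),
]
summary = summary_view(marks)
print(summary["up"])  # {'Pro-1': ['Argument about cost savings', 'Argument about evidence']}
```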

EVALUATION

To assess the impact of multi-persona debates on user engagement and bias reduction, I conducted an in-lab study comparing the Multi-Persona Debate System with a retrieval-based baseline (ArgumentSearch).

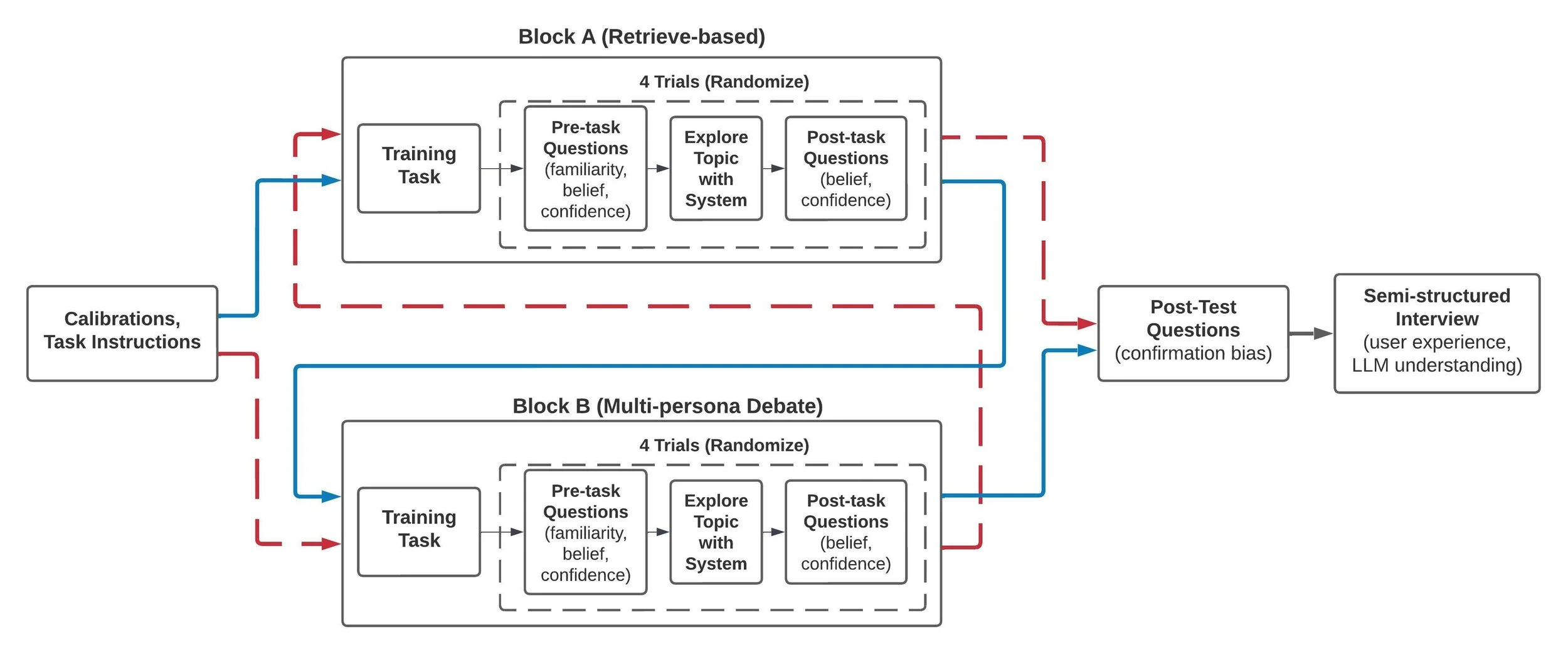

Flowchart of the experimental procedure (within-subjects A/B comparison).

Study Design

Type: Within-subjects laboratory experiment comparing the Multi-Persona Debate System with the baseline ArgumentSearch interface.

Participants: 40 adults (balanced across gender and domain knowledge).

Duration: ~75 minutes per participant (two experimental blocks + post-study interview).

Tasks:

Read a task prompt on a controversial topic.

Interact with either the debate system or the baseline search results.

Rate agreement with the claim before and after the interaction.

Complete usability and cognitive reflection questionnaires.
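Belief change and belief certainty can both be derived from the pre- and post-interaction agreement ratings. The sketch below assumes a 1-7 agreement scale with 4 as the neutral midpoint and treats certainty as the distance from that midpoint; both are assumptions, since the scale used in the study is not stated here. A shift "toward neutral" is then simply a drop in that distance.

```python
def certainty(rating: float, midpoint: float = 4.0) -> float:
    """Distance of an agreement rating from the neutral midpoint (assumed 1-7 scale)."""
    return abs(rating - midpoint)


def belief_change(pre: float, post: float, midpoint: float = 4.0) -> dict:
    """Compare pre- and post-interaction agreement ratings for one claim."""
    before, after = certainty(pre, midpoint), certainty(post, midpoint)
    return {
        "shift": post - pre,                 # signed change in agreement
        "certainty_change": after - before,  # negative means a less extreme stance
        "moved_toward_neutral": after < before,
    }


print(belief_change(6, 5))
# {'shift': -1, 'certainty_change': -1.0, 'moved_toward_neutral': True}
```

Note that a rating that crosses the midpoint by the same distance (e.g., 6 to 2) leaves certainty unchanged, which is why the signed shift is kept alongside it.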

Measures Collected

Behavioral: Interaction time, number of debate rounds generated, and number of argument bookmarks.

Eye-tracking: Fixation duration, gaze transitions between pro and con arguments, and attention bias toward confirming or disconfirming evidence.

Cognitive: Pre/post belief shifts, self-reported engagement, and perceived credibility of sources.
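The eye-tracking measures can be illustrated with a small computation over a fixation sequence. Each fixation is assumed to be already mapped to an area of interest (AOI) labeled with the stance of the fixated argument; the labels, field names, and the "disconfirming share" index are hypothetical choices, not the study's published definition of attention bias.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    aoi: str            # stance of the fixated argument: "pro" or "con" (hypothetical AOI labels)
    duration_ms: float


def attention_metrics(fixations, prior_stance):
    """Split dwell time into confirming vs. disconfirming arguments and count pro/con switches."""
    if prior_stance not in ("pro", "con"):
        raise ValueError("prior_stance must be 'pro' or 'con'")
    opposite = "con" if prior_stance == "pro" else "pro"
    confirming = sum(f.duration_ms for f in fixations if f.aoi == prior_stance)
    disconfirming = sum(f.duration_ms for f in fixations if f.aoi == opposite)
    # Gaze transitions: consecutive stance-labeled fixations that switch sides.
    sides = [f.aoi for f in fixations if f.aoi in ("pro", "con")]
    transitions = sum(1 for a, b in zip(sides, sides[1:]) if a != b)
    total = confirming + disconfirming
    return {
        "confirming_ms": confirming,
        "disconfirming_ms": disconfirming,
        # Share of stance-related dwell time spent on disconfirming arguments.
        "disconfirming_share": disconfirming / total if total else 0.0,
        "pro_con_transitions": transitions,
    }


fixations = [Fixation("pro", 200), Fixation("con", 300), Fixation("con", 250), Fixation("pro", 150)]
m = attention_metrics(fixations, prior_stance="pro")
print(m["confirming_ms"], m["disconfirming_ms"], m["pro_con_transitions"])  # 350 550 2
```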

Analysis Approach

Quantitative: Repeated-measures ANOVA for fixation, engagement, and belief change metrics.

Qualitative: Thematic coding of interview responses on user reflection and perceived usefulness.

Clustering: Interaction patterns grouped into behavioral archetypes (Active Observers, Multi-Round Explorers, Critical Readers).

This evaluation framework directly linked interaction behaviors, attention allocation, and belief change, revealing how debate design affects reflective reasoning.
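For a one-factor within-subjects design like this one, the repeated-measures ANOVA can be sketched from sums of squares with only the standard library. This is an illustrative implementation, not the study's analysis code: it assumes complete data and a single within-subjects factor, applies no sphericity correction (which does not matter with two levels), and returns F without a p-value. In practice a library routine such as statsmodels' `AnovaRM` would normally be used. The scores below are hypothetical.

```python
from statistics import mean


def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA (no sphericity correction).

    scores[i][j] is participant i's score under condition j; data must be complete.
    Returns the F statistic and its (numerator, denominator) degrees of freedom.
    """
    n = len(scores)
    k = len(scores[0]) if scores else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete n x k table with n >= 2 and k >= 2")
    grand = mean(x for row in scores for x in row)
    cond_means = [mean(row[j] for row in scores) for j in range(k)]
    subj_means = [mean(row) for row in scores]
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual (condition x subject) variation after removing both main effects.
    ss_error = ss_total - ss_cond - ss_subj
    if ss_error <= 0:
        raise ValueError("no residual variance; F is undefined")
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, (df_cond, df_error)


# Hypothetical scores per participant: [debate system, baseline].
scores = [[5.0, 3.0], [6.0, 4.0], [4.0, 3.0], [7.0, 4.0]]
f, df = rm_anova_oneway(scores)
print(round(f, 6), df)  # 24.0 (1, 3)
```

With exactly two conditions, this F equals the square of the paired t statistic on the per-participant differences, which is a useful sanity check.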

KEY FINDINGS

1. Reduced Confirmation Bias through Active Exposure

Users fixated ~27% longer on arguments opposing their prior beliefs, indicating increased cognitive engagement with counter-attitudinal content.

Participants showed lower post-task confidence in their initial stances, suggesting more open-minded reflection.

2. Three Engagement Patterns Identified

Active Observers: Regularly used bookmarks and frequently revisited arguments.

Multi-Round Explorers: Generated multiple debate rounds but initially favored familiar stances.

Critical Readers: Engaged longest, edited personas to balance perspectives, and explored counterarguments deeply.
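These archetypes came from clustering interaction patterns, but this page does not say which algorithm or features were used. As a stand-in, here is plain k-means (Lloyd's algorithm) on z-scored features; the three features (bookmarks, debate rounds, persona edits), the data, and the first-k-points initialization are all hypothetical.

```python
import math
from statistics import mean, pstdev


def standardize(rows):
    """Z-score each feature column so no single feature dominates the distances."""
    stats = [(mean(col), pstdev(col) or 1.0) for col in zip(*rows)]
    return [[(x - m) / s for x, (m, s) in zip(row, stats)] for row in rows]


def kmeans(points, k, max_iter=100):
    """Lloyd's k-means; the first k points serve as initial centroids (deterministic)."""
    centroids = [list(p) for p in points[:k]]
    labels = None
    for _ in range(max_iter):
        # Assign each point to its nearest centroid (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:  # assignments unchanged: converged
            break
        labels = new_labels
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [mean(dim) for dim in zip(*members)]
    return labels


# Hypothetical per-participant features: [bookmarks, debate rounds, persona edits].
features = [[9, 2, 0], [1, 6, 0], [2, 3, 3], [8, 2, 0], [1, 7, 1], [3, 3, 4]]
labels = kmeans(standardize(features), k=3)
print(labels)  # [0, 1, 2, 0, 1, 2]
```

In this toy data, heavy bookmarkers, multi-round generators, and persona editors fall into separate clusters; naming clusters as archetypes would still require inspecting each cluster's feature profile.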

3. Observable Belief Moderation

After interacting with the debate system, 18% more participants shifted toward neutral or balanced views compared with the baseline.

Participants described the debates as “more engaging than static search results” and said they helped them “see why others think differently.”

4. Design Implications

Debate framing can foster empathy, curiosity, and balanced reasoning.

Persona diversity cues (color and stance labeling) improved comprehension and reduced overload.

User feedback inspired a Condensed Debate Summary feature for quick reflection after long debates.